Embedding Koopman Optimal Control in Robot Policy Learning

Hang Yin, Michael C. Welle, and Danica Kragic

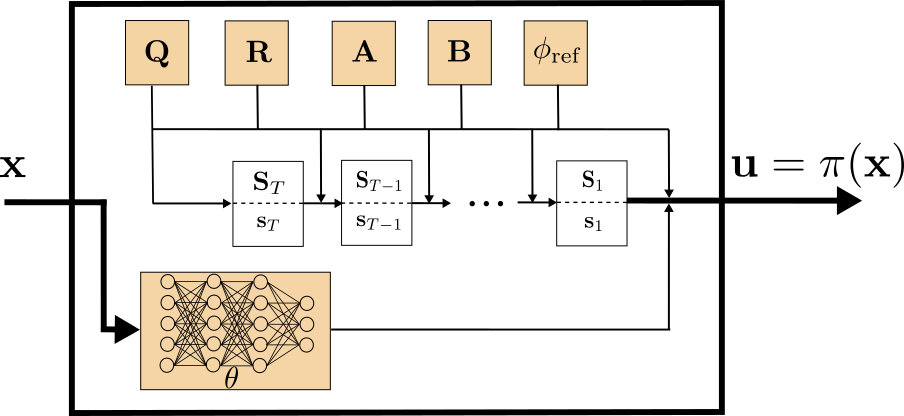

Embedding an optimization process has been explored as a way to impose efficient and flexible policy structures. Existing work often builds upon nonlinear optimization with explicit iteration steps, making policy inference prohibitively expensive for online learning and real-time control. Our approach embeds a linear-quadratic regulator (LQR) formulation with a Koopman representation, thus combining the tractability of a closed-form solution with the richness of a non-convex neural network. We use a few auxiliary objectives and a reparameterization to enforce optimality conditions of the policy, which can be easily integrated into standard gradient-based learning. Our approach is shown to be effective for learning policies with an optimality structure and for efficient reinforcement learning, in tasks including simulated pendulum control, 2D and 3D walking, and manipulation of both rigid and deformable objects. We also demonstrate a real-world application in a robot pivoting task.
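
To make the idea of an LQR embedded in a Koopman representation concrete, here is a minimal NumPy sketch. It is an illustration under stated assumptions, not the authors' implementation. The lifting function `lift`, the latent matrices `A` and `B`, and the cost weights `Q` and `R` are hypothetical hand-picked values. In the approach described above the lifting would be a learned neural network, and the abstract does not specify how the LQR solution is computed, so a plain Riccati recursion stands in for it here.

```python
import numpy as np

def lift(x):
    """Hypothetical lifting z = phi(x); a stand-in for a learned encoder."""
    x = np.asarray(x, dtype=float)
    # State (angle, velocity) lifted to (angle, velocity, sin(angle)).
    return np.array([x[0], x[1], np.sin(x[0])])

def lqr_gain(A, B, Q, R, tol=1e-10, max_iter=10000):
    """Infinite-horizon discrete-time LQR via Riccati recursion.

    Iterates P <- Q + A'PA - A'PB (R + B'PB)^{-1} B'PA until the update
    is below `tol`, then returns the gain K (for u = -K z) and P.
    """
    P = Q.copy()
    for _ in range(max_iter):
        G = np.linalg.solve(R + B.T @ P @ B, B.T @ P @ A)
        P_next = Q + A.T @ P @ A - A.T @ P @ B @ G
        if np.max(np.abs(P_next - P)) < tol:
            P = P_next
            break
        P = P_next
    K = np.linalg.solve(R + B.T @ P @ B, B.T @ P @ A)
    return K, P

# Assumed linear latent dynamics z' = A z + B u (illustrative values only).
A = np.array([[1.0, 0.1, 0.0],
              [0.0, 1.0, 0.1],
              [0.0, 0.0, 0.9]])
B = np.array([[0.0], [0.1], [0.0]])
Q = np.eye(3)            # latent state cost (assumed)
R = 0.1 * np.eye(1)      # control cost (assumed)

K, P = lqr_gain(A, B, Q, R)

def policy(x):
    """Linear feedback in the lifted space: u = -K phi(x)."""
    return -K @ lift(x)

u = policy([0.3, -0.1])
```

Once `K` is available, inference costs one evaluation of `lift` and one matrix-vector product, which is the kind of tractability that a closed-form solution offers over iterative nonlinear optimization at every control step.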
